Context

The autonomous vehicle is one of the major challenges of tomorrow's mobility. In the near future, users will have access to fleets of shared autonomous vehicles. Progress in autonomous vehicles is driven by improvements in sensors.

Lidars are shrinking in size, their range is increasing, and their cost is decreasing. Emerging technologies (solid-state Lidars) promise further miniaturization and the elimination of mechanical parts, for better integration into the vehicle.

As far as cameras are concerned, new technologies have emerged in recent years: time-of-flight cameras, plenoptic cameras, omnidirectional cameras, and event cameras. These cameras appear to have a bright future because they provide information that conventional cameras do not: depth, multiple views, a wider field of view, etc.

Objective

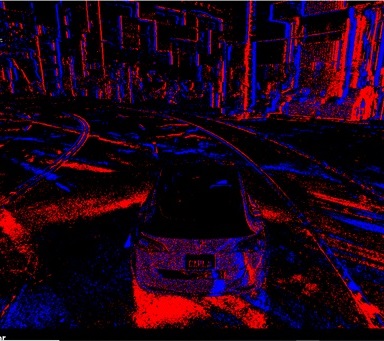

The main objective of this project is to make the most of a sensor that is technologically disruptive compared with existing solutions for autonomous vehicle perception: the event camera. The event camera, also known as a neuromorphic camera or Dynamic Vision Sensor (DVS), appears particularly promising for autonomous vehicles. It is a bio-inspired sensor that, instead of capturing static images at a fixed frequency (even though scenes are dynamic), measures changes in illumination at the pixel level, asynchronously. These properties make the camera particularly interesting for the perception of objects and for operation in difficult lighting conditions. In addition, perception for the autonomous vehicle must be three-dimensional in order to locate the different entities (other vehicles, motorcycles, cyclists, pedestrians) and determine whether a situation is dangerous or normal.
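To make the sensing principle concrete, here is a minimal sketch of an idealized event-generation model, not the behavior of any specific device. It assumes the commonly used simplification that a pixel emits an event when its log intensity changes by at least a contrast threshold. The `Event` type, the `events_between` function, the `threshold` value, and the comparison of two intensity snapshots are all illustrative assumptions; a real sensor operates per pixel in continuous time.

```python
import math
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    """Hypothetical event record: pixel position, timestamp, polarity."""
    x: int
    y: int
    t: float
    polarity: int  # +1 = brighter, -1 = darker


def events_between(
    prev: Sequence[Sequence[float]],
    curr: Sequence[Sequence[float]],
    t: float,
    threshold: float = 0.2,  # assumed contrast threshold (log-intensity units)
    eps: float = 1e-6,       # avoids log(0) on fully dark pixels
) -> List[Event]:
    """Emit one event for each pixel whose log intensity changed by >= threshold."""
    events: List[Event] = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_prev, row_curr)):
            delta = math.log(b + eps) - math.log(a + eps)
            if abs(delta) >= threshold:
                events.append(Event(x, y, t, 1 if delta > 0 else -1))
    return events


# Two 2x2 intensity snapshots: one pixel brightens, one darkens, two are static.
prev = [[0.5, 0.5], [0.5, 0.5]]
curr = [[0.5, 1.0], [0.2, 0.5]]
events = events_between(prev, curr, t=0.001)
for e in events:
    print(e)
```

Static pixels produce no output at all, which is the key difference from a frame-based camera that would retransmit the full image at every frame.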

Approach

Several solutions will be explored to retrieve depth information: SLAM (Simultaneous Localization and Mapping), DATMO (Detection and Tracking of Moving Obstacles) by event-based stereovision, and multimodal event camera/Lidar fusion.

A recognition stage will also be necessary so that the autonomous vehicle can make the most appropriate decision for the situation. The approaches that currently perform best on conventional images (CNN, etc.) are not suited to the structure of the data provided by the event camera, so new approaches must be found.
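The mismatch between event data and image-based networks can be illustrated with a small sketch. An event stream is a sparse, time-ordered list rather than a dense pixel grid. One simple workaround is to accumulate the events of a time window into a two-channel count image, which a conventional CNN can consume at the cost of discarding the fine timing within the window. The tuple layout `(x, y, t, polarity)` and the `accumulate` function below are assumptions made for illustration, not a format defined by this project.

```python
from typing import Iterable, List, Tuple

# Assumed event layout: (x, y, timestamp in seconds, polarity in {+1, -1})
EventT = Tuple[int, int, float, int]


def accumulate(
    events: Iterable[EventT],
    width: int,
    height: int,
    t_start: float,
    t_end: float,
) -> List[List[List[int]]]:
    """Count events per pixel in [t_start, t_end).

    Returns frame[y][x] = [positive_count, negative_count].
    """
    frame = [[[0, 0] for _ in range(width)] for _ in range(height)]
    for x, y, t, p in events:
        in_window = t_start <= t < t_end
        in_bounds = 0 <= x < width and 0 <= y < height
        if in_window and in_bounds:
            frame[y][x][0 if p > 0 else 1] += 1
    return frame


stream = [
    (0, 0, 0.001, 1),
    (0, 0, 0.002, 1),
    (1, 0, 0.003, -1),
    (1, 1, 0.020, 1),  # falls outside the 10 ms window below
]
frame = accumulate(stream, width=2, height=2, t_start=0.0, t_end=0.010)
print(frame)
```

The two events at pixel (0, 0) collapse into a single count, so their order and spacing in time are lost. This loss is one reason why representations and architectures designed for events are of interest.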

Perception for the autonomous vehicle

Mobile Event Camera in a Dynamic Scene

Characteristic points detection and tracking

One of the first challenges is to find points of interest and associate them with signatures (descriptors) so that they can be tracked over time (feature detection and tracking). This task is essential for many computer vision algorithms: visual odometry, stereovision, optical flow, segmentation, and object recognition.

Multimodality: event camera/Lidar

The current strategy of the various players in the field is to merge information from sensors with different modalities (cameras, Lidar, radar). In this case, the information from these different modalities can be of a totally different nature: 3D point clouds, 2D images, etc.
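A common first step when relating a 3D point cloud to 2D camera data is to project the points onto the image plane. The sketch below uses the standard pinhole camera model and assumes the Lidar points have already been transformed into the camera coordinate frame (extrinsic calibration is not shown). The `project_points` function and the intrinsic values `fx`, `fy`, `cx`, `cy` are hypothetical and chosen only for the example.

```python
from typing import Iterable, List, Tuple

Point3D = Tuple[float, float, float]  # (X, Y, Z) in the camera frame, metres
PixelDepth = Tuple[int, int, float]   # (u, v, depth)


def project_points(
    points: Iterable[Point3D],
    fx: float, fy: float,   # focal lengths in pixels (assumed values)
    cx: float, cy: float,   # principal point in pixels (assumed values)
    width: int, height: int,
) -> List[PixelDepth]:
    """Project 3D points to pixel coordinates with a pinhole model."""
    pixels: List[PixelDepth] = []
    for X, Y, Z in points:
        if Z <= 0:
            continue  # point is behind the camera
        u = fx * X / Z + cx
        v = fy * Y / Z + cy
        if 0 <= u < width and 0 <= v < height:
            pixels.append((int(u), int(v), Z))
    return pixels


cloud = [
    (0.0, 0.0, 10.0),   # straight ahead
    (1.0, -0.5, 5.0),   # up and to the right
    (0.0, 0.0, -2.0),   # behind the camera, dropped
    (50.0, 0.0, 1.0),   # far outside the field of view, dropped
]
pixels = project_points(cloud, fx=200.0, fy=200.0, cx=160.0, cy=120.0,
                        width=320, height=240)
print(pixels)
```

Each surviving point yields a pixel location with an associated depth, which can then be matched against events or image features observed at that pixel.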

Object Recognition Using Event Cameras

Object recognition in computer vision has advanced with deep learning methods. For event cameras, neural network architectures need to be rethought and adapted. We propose to develop new techniques that combine spatial convolutional methods (CNN) with spiking methods (SNN).
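For readers unfamiliar with spiking networks, the sketch below shows a discrete-time leaky integrate-and-fire (LIF) neuron, a basic unit often used in SNNs. It is a textbook simplification, not the model proposed in this project; the `lif_run` function and the `leak` and `threshold` values are illustrative assumptions.

```python
from typing import Iterable, List


def lif_run(
    inputs: Iterable[float],
    leak: float = 0.9,       # fraction of membrane potential kept per step (assumed)
    threshold: float = 1.0,  # firing threshold (assumed)
) -> List[int]:
    """Simulate one LIF neuron; return 1 for each step where it spikes, else 0."""
    v = 0.0  # membrane potential
    spikes: List[int] = []
    for current in inputs:
        v = leak * v + current  # leak, then integrate the input
        if v >= threshold:
            spikes.append(1)
            v = 0.0             # reset after firing
        else:
            spikes.append(0)
    return spikes


spikes = lif_run([0.5, 0.5, 0.0, 0.3, 0.9])
print(spikes)
```

The neuron produces output only when enough input has accumulated over time, which mirrors the sparse, asynchronous nature of the event stream itself.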